Key Takeaways

- Computing has deep historical roots, from ancient counting tools like the Sumerian abacus to geared devices such as the Antikythera mechanism, which modeled astronomical cycles.

- Pioneers like Charles Babbage and Ada Lovelace laid the foundation for modern computing, with Babbage designing the Analytical Engine and Lovelace widely regarded as the first programmer.

- The electronic era revolutionized computing, with machines like the Atanasoff-Berry Computer, Colossus, and ENIAC dramatically increasing speed through electronic switching, binary arithmetic, and programmability.

- Key 20th-century milestones include the invention of the transistor, integrated circuits, and microprocessors, leading to smaller, faster machines and the rise of personal computers.

- Technological advancements in computing fueled global progress, from mainframes improving industrial efficiency to the internet and supercomputing reshaping connectivity, research, and communication.

- Early computing breakthroughs had far-reaching impacts, fostering scientific discoveries, transforming industries, and democratizing access to technology worldwide.

When I think about how much we rely on computers today, it’s hard to imagine a time when they didn’t exist. From smartphones to complex supercomputers, they’re everywhere, shaping the way we live, work, and connect. But have you ever stopped to wonder when it all began? When was computing actually invented?

The story of computing isn’t tied to a single moment or invention—it’s a fascinating journey that spans centuries. From ancient tools used for calculations to groundbreaking machines that laid the foundation for modern technology, the evolution of computing is full of surprising twists. Let’s take a closer look at how it all started.

Early Origins Of Computing

Long before modern computers, humans developed methods and tools to solve mathematical problems and process information. These early innovations laid the groundwork for the computing systems we rely on today.

Ancient Tools And Methods

The earliest forms of computation can be traced back to around 2400 BCE with the Sumerian abacus. The Greeks later built the Antikythera mechanism, a geared device for modeling astronomical cycles, while the Chinese, Romans, and others developed their own variations of the abacus. These tools were primarily used to simplify arithmetic for trade, astronomy, and engineering. For example, the Roman abacus enabled merchants to perform addition and subtraction efficiently during transactions. Writing systems and counting boards also supported early data organization.

The Mechanical Era: 17th To 19th Centuries

During the 17th century, inventors created mechanical devices to automate calculations. Blaise Pascal developed the Pascaline in 1642, one of the first mechanical calculators designed to add and subtract numbers. Gottfried Wilhelm Leibniz built on this idea in the late 1600s with the stepped reckoner, whose stepped-drum mechanism (the Leibniz wheel) also allowed multiplication and division.

In the 19th century, Charles Babbage designed the Difference Engine in the 1820s to compute mathematical tables automatically and accurately. Later, he conceptualized the Analytical Engine, an early general-purpose computing machine that introduced features like programs supplied on punched cards and a ‘memory’ unit. Ada Lovelace, writing about how the engine could be programmed, published what is widely regarded as the first algorithm intended for a machine, marking a critical milestone in computer science history.
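
The Difference Engine takes its name from the method of finite differences, which lets a machine tabulate a polynomial using nothing but repeated addition. Here is a minimal Python sketch of that idea. The function names and the example polynomial are my own illustrative choices, and the code models the arithmetic only, not Babbage's actual mechanism.

```python
def initial_differences(values):
    """Return the first entry of each difference column for the seed values."""
    columns = []
    row = list(values)
    while row:
        columns.append(row[0])
        # Next column: differences between neighbouring entries.
        row = [b - a for a, b in zip(row, row[1:])]
    return columns


def tabulate(seed_values, count):
    """Extend a polynomial table to `count` entries using only addition."""
    registers = initial_differences(seed_values)
    table = []
    for _ in range(count):
        table.append(registers[0])
        # Each register adds in the one below it; no multiplication needed.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


# Illustrative polynomial (an assumption for this example): p(x) = x^2 + x + 41
seed = [x * x + x + 41 for x in range(3)]  # three values pin down a quadratic
print(tabulate(seed, 8))
```

Three seed values are enough here because the second differences of a quadratic are constant, so every later entry follows from additions alone.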

The Birth Of Modern Computing

Modern computing began to take shape during the 19th century, marked by groundbreaking ideas and inventions that laid its foundation. Pioneering figures like Charles Babbage and Ada Lovelace played key roles in shaping its future.

Charles Babbage And The Analytical Engine

Charles Babbage, often called the “father of the computer,” first described the Analytical Engine in 1837. It was a mechanical general-purpose computing device. Unlike earlier calculators, the Engine featured an arithmetic unit (the “mill”), memory (the “store”), and control for conditional operations, all essential components of modern computers.

Babbage’s design allowed for the automatic execution of complex instructions. Though never fully built during his lifetime, the Analytical Engine anticipated the architecture of later computing machinery. Its use of punched cards for programming, borrowed from the Jacquard loom, foreshadowed the punched-card input common in 20th-century computers.

Ada Lovelace: The First Programmer

Ada Lovelace worked with Babbage on the Analytical Engine and is widely recognized as the first programmer. In 1843, she published detailed notes alongside her translation of an article about the Engine, including an algorithm for computing Bernoulli numbers that was designed to be executed by the machine. This algorithm is often considered the first published computer program.
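
The algorithm in Lovelace’s notes computed Bernoulli numbers. To give a feel for the kind of calculation involved, here is a short Python sketch that produces them from a standard modern recurrence. It is not a transcription of her procedure, and the function name and indexing convention (with B_1 = -1/2) are assumptions made for this example.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n_max):
    """Return [B_0, ..., B_n_max] as exact fractions (convention B_1 = -1/2)."""
    b = [Fraction(1)]
    for m in range(1, n_max + 1):
        # Recurrence: sum over k = 0..m of C(m+1, k) * B_k equals 0.
        # Solve it for B_m using the values already computed.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-acc / (m + 1))
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(index, value)
```

Exact fractions are used instead of floating point because the values are rational and rounding errors would build up quickly in the recurrence.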

Lovelace’s notes also included visionary insights about the potential of computing beyond pure mathematics. She predicted that such machines could process non-numerical data, like music or graphics, demonstrating a deep understanding of theoretical computing. Her contributions established an essential foundation for programming concepts used today.

The Electronic Age Of Computing

The shift to electronic computing marked a transformative era. Complex problems could now be solved faster, with innovations paving the way for the modern digital world.

The Invention Of The First Computers

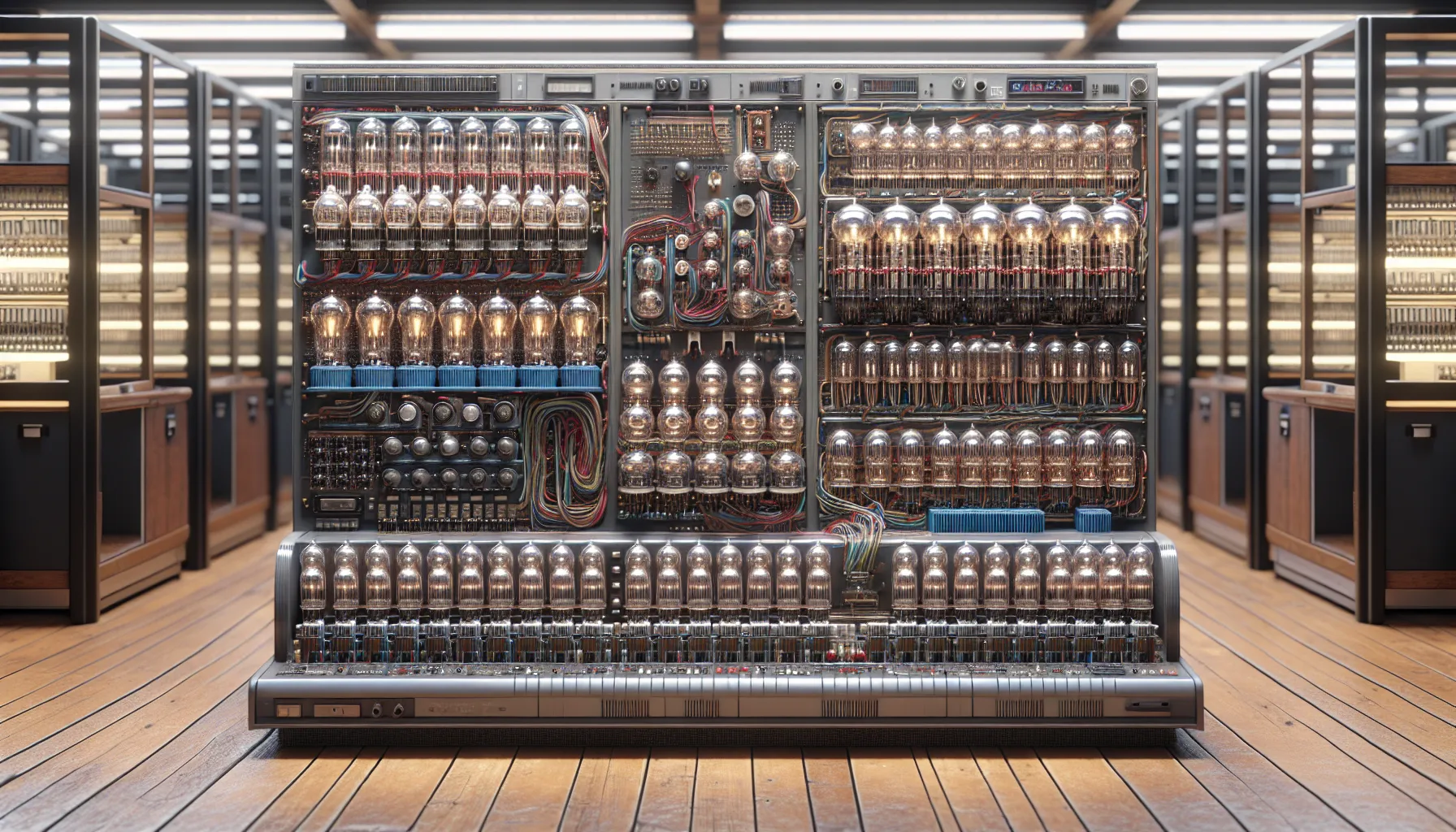

Electronics revolutionized computing with devices like the Atanasoff-Berry Computer (ABC), conceived in 1937 and built between 1939 and 1942. The ABC, designed by John Atanasoff and Clifford Berry, used binary arithmetic and vacuum-tube switching. Shortly after, the British Colossus, first working in late 1943 and operational in early 1944, became the first programmable electronic digital computer, used for codebreaking during World War II. ENIAC (Electronic Numerical Integrator and Computer), regarded as the first general-purpose electronic computer, was completed in 1945 and publicly unveiled in the United States in February 1946, performing complex calculations at unprecedented speeds.
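
To see why binary arithmetic suits electronic switches so well, it helps to notice that adding two binary numbers needs only a handful of on/off logic operations per bit. The Python sketch below shows the general idea with a textbook full adder. It is an illustration with hypothetical function names, not a model of the ABC’s actual circuitry.

```python
def full_adder(a, b, carry_in):
    """Add three bits using only AND, OR and XOR; return (sum_bit, carry_out)."""
    sum_bit = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return sum_bit, carry_out


def add_binary(x, y, width=8):
    """Add two non-negative integers bit by bit, least significant bit first."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    # A final carry of 1 means the sum did not fit in `width` bits.
    return result, carry


print(add_binary(5, 3))      # fits in 8 bits
print(add_binary(200, 100))  # overflows 8 bits, so the carry flag is set
```

Each bit position repeats the same tiny circuit, which is what makes the approach practical to build out of identical switching elements.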

Key Milestones In The 20th Century

Several pivotal developments defined computing’s electronic age. The invention of the transistor at Bell Labs in 1947 enabled smaller, faster, more reliable devices that gradually replaced vacuum tubes. In 1958, Jack Kilby of Texas Instruments demonstrated the first integrated circuit, a foundation for modern microprocessors. The 1960s saw the rise of time-sharing systems, enabling multiple users to access a single computer at once. In 1971, Intel released the 4004, the first commercially available microprocessor, integrating a complete CPU onto a single chip. By the late 20th century, personal computers like the Apple II and IBM PC democratized computing, bringing technology to homes and businesses worldwide.

Evolution Of Computing Technologies

The evolution of computing technologies has seen remarkable progress, transforming from large, specialized machines into essential tools for everyday life. Advances in hardware, software, and connectivity have continuously redefined the capabilities of computers.

From Mainframes To Personal Computers

Mainframes, introduced in the 1950s, revolutionized data processing for businesses and government institutions. IBM’s System/360 series, launched in 1964, set a standard for compatibility and mass adoption. These machines occupied entire rooms and required dedicated operators for multi-user processing.

The shift to personal computing began in the 1970s. Microprocessors, beginning with the Intel 4004, made compact, affordable machines possible. The Apple II, introduced in 1977, offered user-friendly software and became a commercial success. IBM entered the personal computer market in 1981 with the IBM PC, which shipped with PC DOS, IBM’s branded version of Microsoft’s MS-DOS, and sparked widespread adoption in homes and offices.

The Rise Of The Internet And Supercomputing

The 1990s marked the expansion of the internet, transforming communication and data sharing. Tim Berners-Lee’s World Wide Web, proposed in 1989 and opened to the public in 1991, simplified access to online resources. Browsers like Netscape Navigator, released in 1994, brought the internet into mainstream use by the mid-1990s.

Supercomputing, essential for high-performance tasks, evolved alongside personal computing. Cray Research introduced the Cray-1 in 1976, achieving peak speeds of up to 160 MFLOPS (million floating-point operations per second). The fastest modern supercomputers now reach the exaFLOPS range, or 10^18 operations per second, and support advanced simulations, Artificial Intelligence (AI), and climate research. Supercomputing power has become integral for handling massive datasets and solving complex problems.
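
To put those units in perspective, here is a quick back-of-the-envelope calculation in Python. It compares the Cray-1’s 160 MFLOPS peak with a round 1 exaFLOPS, which is an assumed reference point for this example and not the measured speed of any particular machine.

```python
CRAY_1_FLOPS = 160e6    # peak figure quoted above: 160 MFLOPS
EXASCALE_FLOPS = 1e18   # assumed round reference point: 1 exaFLOPS

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def speedup(new_flops, old_flops):
    """How many times faster the newer machine is at peak."""
    return new_flops / old_flops


def years_to_match_one_second(new_flops, old_flops):
    """Years the older machine needs to do one second of the newer one's work."""
    return speedup(new_flops, old_flops) / SECONDS_PER_YEAR


ratio = speedup(EXASCALE_FLOPS, CRAY_1_FLOPS)
years = years_to_match_one_second(EXASCALE_FLOPS, CRAY_1_FLOPS)
print(f"Speedup: about {ratio:.3g}x")
print(f"Cray-1 time for one exascale-second: about {years:.0f} years")
```

In other words, the gap is several billion-fold, so work that an exascale system finishes in one second would have kept a Cray-1 busy for roughly two centuries at its peak rate.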

Impact Of Early Computing Inventions

Early computing inventions created a foundation that influenced scientific research, transformed industries, and shaped societal progress. These breakthroughs laid the groundwork for innovation across multiple domains.

Scientific Advancements

Computing inventions revolutionized how researchers approached complex problems. Devices like the Antikythera mechanism let ancient astronomers model celestial cycles and predict events such as eclipses, supporting calendar keeping. The Analytical Engine, designed by Charles Babbage, introduced the concept of programmable machines, inspiring scientific exploration in mathematics and engineering.

The transition to electronic computers significantly accelerated scientific research. The ENIAC, completed in 1945, executed calculations that previously required weeks in a fraction of the time. Scientists used early computers to model weather patterns and simulate nuclear reactions, and later generations of machines to analyze genetic data, enabling groundbreaking discoveries. As computational power increased, fields like physics, chemistry, and biology saw rapid growth in research capabilities.

Social And Industrial Transformation

Computing inventions transformed industries and improved efficiency in production, trade, and communication. Mechanical calculators such as Blaise Pascal’s Pascaline were built to ease tax and bookkeeping arithmetic. In the mid-20th century, early electronic systems like the UNIVAC I, first delivered in 1951, increased productivity by automating statistical analysis and business processes.

Society benefitted from computing’s integration into daily life. The rise of mainframe systems in the 1950s allowed companies to process payrolls, manage inventory, and maintain databases. The 1970s microprocessor-driven shift introduced personal computers, democratizing access to technology. With computers readily available, education, healthcare, and finance saw advancements that connected individuals and businesses on a global scale.

Conclusion

Computing’s journey is a testament to human ingenuity and our relentless drive to solve problems and innovate. From ancient tools to modern supercomputers, each step has contributed to the technology we rely on every day. It’s incredible to think how far we’ve come, and yet, the story of computing is far from over.

As we continue to push boundaries with AI, quantum computing, and beyond, it’s clear that the evolution of technology will keep shaping our world in unimaginable ways. Exploring its origins not only helps us appreciate the progress we’ve made but also inspires us to dream about what’s next.

Frequently Asked Questions

What is the origin of computing?

The origins of computing date back to ancient times with tools like the Sumerian abacus, created around 2400 BCE and used for basic arithmetic. Later civilizations developed their own devices, such as the Chinese abacus and the Greek Antikythera mechanism, which modeled astronomical cycles, laying the foundation for modern computing.

Who is considered the “father of the computer”?

Charles Babbage is often called the “father of the computer” for designing the Analytical Engine, which he first described in 1837. Although it was never built during his lifetime, the design included essential components of modern computers, such as an arithmetic unit and memory.

Why is Ada Lovelace significant in computing history?

Ada Lovelace is celebrated as the first computer programmer. She worked with Charles Babbage and wrote detailed notes, including an algorithm for the Analytical Engine. Her work demonstrated computing’s potential beyond mathematics and laid the groundwork for modern programming concepts.

What was the first programmable digital computer?

The British Colossus, first working in late 1943 and operational in early 1944, was the first programmable electronic digital computer. It was used during World War II to break enemy codes and marked a significant advancement in computer technology.

What role did ENIAC play in computing?

ENIAC (Electronic Numerical Integrator and Computer), completed in 1945 and publicly unveiled in February 1946, was the first general-purpose electronic computer. It performed complex calculations at unprecedented speeds, revolutionizing computing for research and industry.

What were key breakthroughs in computing during the 20th century?

Key milestones included the invention of the transistor (1947), the integrated circuit (1958), and the Intel 4004 microprocessor (1971). These innovations paved the way for smaller, faster, and more efficient computing devices, transforming the industry.

How did personal computers change technology?

Personal computers, such as the Apple II (1977) and IBM PC (1981), revolutionized technology by making computing accessible to individuals and businesses. These user-friendly machines brought computing power into homes and workplaces, driving innovation across industries.

How did the internet impact computing?

The rise of the internet in the 1990s transformed communication and data sharing. It created a global network that connected devices and users, enabling advancements in collaboration, research, and commerce.

What is the importance of supercomputing?

Supercomputers, like the Cray-1, introduced in 1976, and modern systems, are essential for tasks requiring immense computational power. They support advanced simulations, AI development, and scientific research, solving complex problems and processing massive datasets.

How has computing impacted industries and research?

Computing has revolutionized industries like education, healthcare, and finance by improving efficiency, communication, and innovation. In research, it has accelerated discoveries in fields such as medicine, engineering, and astrophysics, enabling more complex data analysis and modeling.